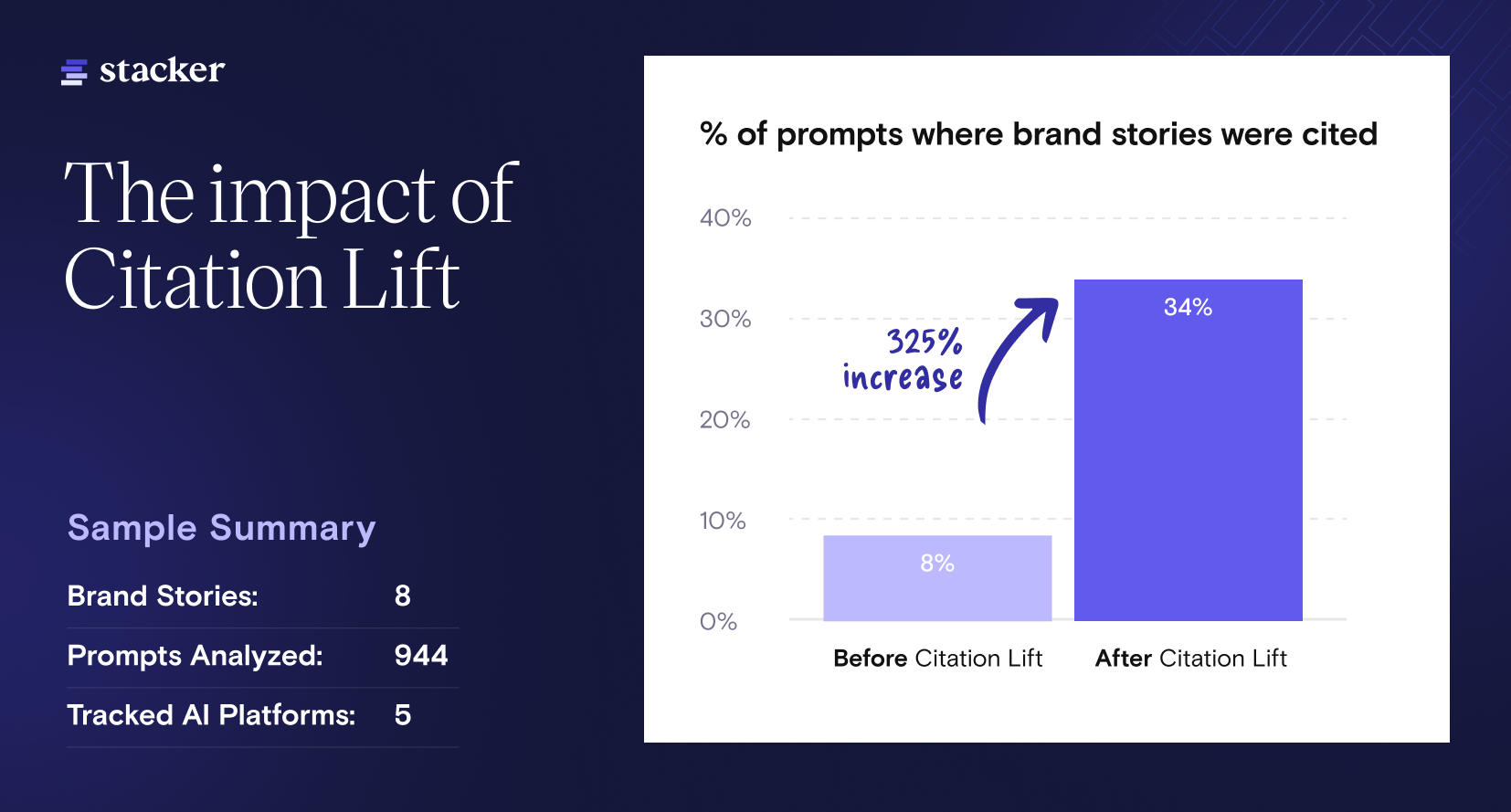

New research from Stacker and Scrunch shows how earned media distribution can increase AI citations by up to 325%.

Plenty of recent studies have examined the role of earned media in AI search, but none have explored a specific question: does distributing and republishing the same story across hundreds of trusted news sites impact how often AI models cite the work and the brand behind it? To answer this, our team at Stacker partnered with AI visibility platform Scrunch on a first-of-its-kind study.

The results point to a clear pattern: stories republished across a diverse set of third-party news outlets see a significant lift in how often AI systems cite them as an authoritative source. By distributing content to a wide range of news outlets, brands increased the surface area for possible citations, which in turn increased the number of places their content was cited across LLMs.

We call this “Citation Lift.”

This impact held across platforms, industries, and prompt types. While the study is limited and there’s much more to explore, this sample gives us a meaningful early view into how earned distribution contributes to AI citations. We’ll continue to commission in-depth research on this topic in early 2026, and you can leave your email below to receive the full report when it’s published.

This study analyzed eight articles from different industries and evaluated 944 prompt–platform combinations across five leading LLMs. Distributed versions were compared against the same stories hosted only on brand domains to measure whether third-party placements influenced citation frequency.

The results offer an early view into how content surfaces in AI answers and how content distribution can expand the visibility footprint of brand stories across emerging AI search environments.

Marketers want to understand how AI search works, yet most research focuses on what affects large language model (LLM) appearances rather than how to create the conditions for those appearances to happen.

Traditional SEO retrieval methods, such as keywords, backlinks, and domain authority, do influence Generative Engine Optimization (GEO), but they miss a key factor shaping AI answers: how generative engines decide which sources to draw from when composing responses. Because content distribution on high-authority sites isn’t part of traditional SEO analysis, it’s rarely recognized as a meaningful driver of AI visibility.

It’s easy to track what happens on your own domain, but outside of backlink attribution, it’s much harder to see how versions of your story published on third-party sites influence visibility in AI search and contribute to building your brand authority.

Until now, there has been no clear way to measure that influence. This work provides the first structured method to understand how third-party distribution contributes to AI citations, offering an early look at how content distribution across multiple publisher domains correlates with citation frequency in AI answers.

In this work, a citation refers to any source an AI system includes when answering a prompt, whether by naming the source or linking to its website. These citations identify which URLs an LLM treats as authoritative for the underlying query.

The study examined eight stories across different industries. Each story appeared in two forms:

| Version | Description |
| --- | --- |
| Brand version | The original article, published on the brand’s own website. |
| Distributed version | The same story, distributed and republished in full across hundreds of third-party publishers, with a canonical tag pointing back to the original version. |

To generate prompts that reflected real-world marketer intent, the team used a custom-built GPT agent. Based on each story’s topic, this agent produced high-value questions a brand would reasonably want to appear for: the same types of questions that real consumers might bring to AI.

For example, for a story on “The Most Expensive College Towns,” the GPT generated prompts such as “Which U.S. college towns have the most expensive housing?” or “Why are college town home prices so high?” These prompts represent realistic queries that could surface either the brand version or the syndicated versions of the article in AI-driven search.

Each prompt was then tested across five major AI platforms. This resulted in 944 prompt–platform combinations, or roughly 189 unique prompts per platform (944 ÷ 5 ≈ 189).
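A minimal sketch of how such a prompt–platform grid could be assembled is shown here. The platform names are hypothetical placeholders, since the five platforms are not named in this section, and `build_test_grid` is an illustrative helper rather than the study's actual tooling.

```python
from itertools import product

# Hypothetical placeholders: the five platforms are not named in this section.
PLATFORMS = ["platform_a", "platform_b", "platform_c", "platform_d", "platform_e"]


def build_test_grid(prompts, platforms=PLATFORMS):
    """Pair every prompt with every platform to form the test grid."""
    return [
        {"prompt": prompt, "platform": platform}
        for prompt, platform in product(prompts, platforms)
    ]


# The two example prompts quoted in the article.
prompts = [
    "Which U.S. college towns have the most expensive housing?",
    "Why are college town home prices so high?",
]
grid = build_test_grid(prompts)
print(len(grid))  # 2 prompts x 5 platforms = 10 combinations
```

Each entry in the grid is one prompt–platform combination, which is the unit the study counts when it reports 944 combinations.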

For every prompt, the AI’s citations were categorized into three groups:

| Category | Definition | What it means |
| --- | --- | --- |
| Brand-only citations | The brand’s original piece is cited alone, without any co-citations of distributed versions of the piece. | This serves as the brand’s baseline visibility metric: how many prompts they are cited in directly, without considering citation lift gained via distribution. |
| Syndicated-only citations | Only versions published on third-party news sites were cited. | These citations expand the breadth of prompt coverage by capturing citations in prompts where the brand is not directly cited. |
| Co-citations | Both the brand’s original version and one or more distributed versions are cited for the same prompt. | These citations expand the depth of prompt coverage by allowing for multiple citations within a single prompt. |

This structure made it possible to isolate the visibility impact driven by distribution, separate from what the brand’s standalone domain might achieve on its own.
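The three categories can be expressed as a small classification rule. The sketch below is an assumption-laden illustration, not the study's implementation: it matches citations by domain only (a real analysis would presumably match the specific article URLs), and the function name, parameters, and example domains are all hypothetical.

```python
from urllib.parse import urlparse


def _host(url):
    """Return the lowercased hostname without a leading 'www.'."""
    host = (urlparse(url).hostname or "").lower()
    return host[4:] if host.startswith("www.") else host


def classify_citations(cited_urls, brand_domain, syndication_domains):
    """Label one AI answer by which versions of the story it cites.

    Simplification: matching is by domain, not by individual article URL.
    """
    hosts = {_host(url) for url in cited_urls}
    cites_brand = brand_domain in hosts
    cites_syndicated = bool(hosts & set(syndication_domains))
    if cites_brand and cites_syndicated:
        return "co-citation"
    if cites_brand:
        return "brand-only"
    if cites_syndicated:
        return "syndicated-only"
    return "unmatched"


# Hypothetical example domains, for illustration only.
label = classify_citations(
    ["https://www.brand.example/story", "https://news.example/story"],
    brand_domain="brand.example",
    syndication_domains={"news.example"},
)
print(label)  # co-citation
```

An answer that cites neither version falls into the fourth, "unmatched" bucket, which is how prompts with no relevant citation are counted.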

This tells us how often these stories show up in the answers for questions people might realistically ask. Because actual search volume cannot be predicted, this dataset functions as a sample that illustrates how often a story may be cited across the many prompts we will never directly observe.

It offers an early indication of how distributed content can influence AI retrieval behavior at scale.

AI systems showed clear differences in how often they cited the brand versions compared to the distributed versions of the eight stories. The table and visual below summarize the combined results across all 944 prompt and platform combinations. Overall, the data shows that distribution created significantly more citations than the brand domains alone.

Citation Outcomes at a Glance:

| Metric | Result | What It Represents |
| --- | --- | --- |
| Brand-only citations | 7.6% | Baseline visibility from the brand’s own domain |
| Syndicated-only citations | 19.2% | Citations that only occurred due to distribution |
| Co-citations | 8.3% | AI citing both brand and distributed versions |
| Total answers with citations | ~34% | Combined visibility across all citation types |
| Unmatched answers | ~66% | Prompts where no version was cited |
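As a rough illustration of how shares like these could be derived, the sketch below tallies per-answer labels into percentages. The label list is toy data invented for the example, not the study's results, and `summarize_outcomes` is a hypothetical helper.

```python
from collections import Counter

CATEGORIES = ("brand-only", "syndicated-only", "co-citation", "unmatched")


def summarize_outcomes(labels):
    """Return each category's share of answers as a percentage."""
    total = len(labels)
    if total == 0:
        raise ValueError("no labeled answers to summarize")
    counts = Counter(labels)
    return {cat: round(100 * counts[cat] / total, 1) for cat in CATEGORIES}


# Toy labels for illustration only; these are not the study's data.
labels = (
    ["brand-only"]
    + ["syndicated-only"] * 2
    + ["co-citation"]
    + ["unmatched"] * 6
)
shares = summarize_outcomes(labels)
coverage = 100 - shares["unmatched"]  # share of answers citing any version
print(shares)
print(coverage)
```

Total coverage is simply everything that is not "unmatched", so the three citation categories and the unmatched share should sum to 100%.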

The baseline visibility of each brand, without any external authoritative sources referencing their content, was measured through brand-only citations, which averaged 7.7% across all eight brands in the study. This indicates that AI engines did recognize these brands as at least somewhat authoritative within their categories.

To measure the net-new visibility the brands earned through the organic distribution of their content on third-party sites, we used syndicated-only citations, which represented 17.5% of responses. This means that in nearly 1 in 5 answers, AI systems recognized the versions of the story republished on third-party news sites via earned distribution as authoritative, but did not cite the brand’s original version. This reflects a common pattern we’ve observed in AI search, where engines tend to view news outlets as more neutral and trustworthy than the brands behind the content.

Co-citations are answers where both the brand’s version of the story and a third-party site’s version were cited simultaneously. Co-citations represented 8.4% of responses, which is a meaningful signal of validation. It suggests that the AI viewed the brand and the third-party source as corroborating evidence for the same query. This reinforces the authority and trustworthiness of the story by showing that multiple independent sources support the information, effectively validating the brand as the original source of expertise and leveraging the publisher’s authority.

Total prompt coverage reached roughly 34%, meaning that when brand-only, syndicated-only, and co-citations were combined, distributed stories surfaced in about one-third of all answers. This added visibility is what we call “citation lift,” or the ability to significantly expand your overall citation presence through earned media pickups. By leveraging distribution, brands increased their visibility by roughly 325% compared to brand-only results, from a baseline of about 8% to about 34%.
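The 325% figure appears to follow from the rounded values reported in this article, a brand-only baseline near 8% and total coverage near 34%. That reading is an inference rather than a formula the study states, and `percent_lift` is a hypothetical helper written to show the arithmetic.

```python
def percent_lift(baseline_pct, total_pct):
    """Percentage increase of total coverage over the brand-only baseline."""
    if baseline_pct <= 0:
        # With a zero baseline the ratio is undefined, so refuse to compute it.
        raise ValueError("baseline must be positive to compute a lift")
    return (total_pct - baseline_pct) / baseline_pct * 100


# Rounded figures from the article: about 8% brand-only, about 34% total.
print(percent_lift(8, 34))  # 325.0
```

Note that the result is sensitive to rounding: the unrounded shares reported elsewhere in the article would give a slightly different percentage.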

However, not every prompt yielded a citation, and in this study, 66% of answers did not include any. This is expected behavior, and reflects the variability in how AI systems choose sources. AI models frequently answer from their training data without linking to a live URL, or they may determine that no specific source meets their citation threshold for that query.

Citation coverage varied by topic, but the overall lift from distribution remained consistent. All eight stories saw higher visibility when syndicated versions appeared on trusted news sites, whether through syndicated-only citations, brand-only citations, or co-citations.

Real estate and health topics showed the strongest lift, with the College Town Housing story reaching 74% coverage and the Health and Wellness story reaching 58%. In both cases, third-party validation played a major role in helping AI systems treat the information as authoritative.

Other topics showed moderate but meaningful gains. Consumer, financial, and economic stories saw mixed performance, yet distributed versions consistently expanded visibility beyond what the brand domains achieved alone. Notably, the Housing Availability story reached 27% coverage despite having zero brand-only citations, which shows how essential earned media can be when neutrality or local authority matters.

This dataset offers an early look at how distributed content shows up in AI-generated answers, and the patterns are already taking shape. Three themes emerged in how AI systems pull, weigh, and cite sources:

In AI search, citations function as the new “first blue link.” They serve as credibility markers, signaling which sources a generative engine views as trustworthy for a given topic. When a model chooses to cite a brand in its answer, it is effectively identifying that brand as a reliable source. And the more often a brand is cited, the more authority it appears to hold in the eyes of AI systems.

When stories were distributed across trusted publisher domains, citation frequency rose from about 8% to about 34%. This lift indicates that earned distribution increases both the number of citations and the authority of the content cited. By appearing in multiple authoritative contexts, a story generates more touch points for the model to encounter, recognize, and validate the information. In effect, distribution increases the number of signals an AI system can reference.

About 66% of prompts produced no citation at all. In many cases, AI models answer from general knowledge rather than pointing to specific sources. These gaps help contextualize the lift we observed: when citations did appear, distribution was often the factor that pushed a story into the model’s source set.

Unmatched prompts also highlight meaningful whitespace. If a query you care about isn’t pulling in your brand or your competitors, that signals an opportunity. It means the model lacks a strong, authoritative source to draw from, providing an opening your content could fill. Identifying these gaps allows your team to shape a content strategy that creates the information AI systems are actively looking for, increasing the likelihood that your brand becomes the source when no one else is.

These findings suggest what we’ve already seen and suspected with the rise of AI: LLMs are reshaping how information is discovered. Visibility no longer lives on a single URL or relies on backlinks; rather, it emerges from patterns of authority that AI systems recognize in context across the web.

What was once a matter of reaching the first page of search results has taken a different form. Discovery now requires content that is high-quality, structured in an AI-friendly way, strategically distributed, and consistently updated.

Understanding the patterns is key to scaling visibility and authority efforts for marketing teams. And we now have evidence that an effective earned media strategy can be a powerful tool in a brand’s GEO toolkit.

Publishing solely on owned channels limits where both larger audiences and AI models have the chance to encounter your stories. When a story appears across many trusted domains, models have more opportunities to learn from it, reference it, and cite it.

Incremental SEO improvements to blog content aren’t enough to drive meaningful growth or build tangible brand authority that will get LLMs to cite your brand content. With AI search rapidly becoming the go-to discovery engine, distribution must become part of the visibility strategy rather than a mere afterthought.

This calls for strong relationships with editorial teams, particularly when branded content is involved. Content performance can no longer be measured only by your own site metrics. It must also reflect the KPIs that matter to publishers across every location where your story lives. Your content team should incorporate these publisher goals into how success is defined and measured.

Additionally, these patterns indicate that teams evaluating AI visibility should look beyond queries and rankings. Retrieval behavior in the age of AI search is context-driven. What matters most is how widely and credibly a story exists across the web, and whether the model consistently recognizes that story as a reliable source for the underlying question. A strong presence across multiple reputable domains gives AI systems more opportunities to encounter and validate the information, which increases the likelihood of being cited.

Context-driven retrieval also highlights the need for clear, easy-to-follow content that answers questions directly and specifically. The more precise and well-structured the information is, the easier it is for AI systems to interpret and surface it as part of an answer.

Modern AI systems draw from many domains rather than relying on a single point of origin, which shifts how brands need to think about where their content appears. When a story lives only on a brand’s site, the model has just one place to encounter it. If that domain does not have strong topical authority for the query, the content may simply not register as relevant.

When that same story appears across multiple reputable publisher domains through earned distribution, the model encounters it in more contexts, which strengthens the overall signal around the information. This broad, multi-domain presence increases the likelihood that the story will surface in AI-generated answers.

Canonical tags play an important role here. AI systems analyze patterns of information rather than relying on canonical tags, but traditional search engines do rely on them. Because SEO rules tend to downgrade or devalue duplicated content, including a canonical tag on each republished copy ensures that the original brand page receives proper credit and is not penalized. And since traditional SEO signals continue to influence how AI systems assess authority, maintaining a clean canonical structure still matters in the AI era.
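One way to check that a republished copy points back to the original is to read its `<link rel="canonical">` tag. The sketch below uses only Python's standard-library HTML parser; the class name, helper function, and example URL are hypothetical, and a production check would also need to fetch the page and handle relative URLs.

```python
from html.parser import HTMLParser


class CanonicalFinder(HTMLParser):
    """Collect the href of the first <link rel="canonical"> tag."""

    def __init__(self):
        super().__init__()
        self.canonical = None

    def handle_starttag(self, tag, attrs):
        if tag != "link" or self.canonical is not None:
            return
        attr_map = dict(attrs)
        # rel may hold several space-separated tokens, so split before testing.
        rel_tokens = (attr_map.get("rel") or "").lower().split()
        if "canonical" in rel_tokens:
            self.canonical = attr_map.get("href")


def find_canonical(html_text):
    """Return the canonical URL declared in the HTML, or None if absent."""
    finder = CanonicalFinder()
    finder.feed(html_text)
    return finder.canonical


# Hypothetical republished page, for illustration only.
page = (
    "<html><head>"
    '<link rel="canonical" href="https://brand.example/story">'
    "</head><body>Story text</body></html>"
)
print(find_canonical(page))  # https://brand.example/story
```

Comparing the returned URL against the brand's original article URL would confirm that the republished copy credits the original version.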

Taken together, this suggests that visibility in AI search is not just about optimizing a single page but about strengthening the overall footprint of the information across the ecosystem and adapting to the rules these models rely on. Consistent distribution helps reinforce those signals, improving cumulative reach, link equity, brand recall, and, in many cases, the authority of the brand’s original content.

This study represents an early, directional exploration. The scope was limited to eight stories, five platforms, and citations (rather than placement or longevity). Future work will expand to include brand-mention lift and citation persistence over time.

However, the initial signal is clear: Widespread placement on trusted news sites correlates directly with increased AI visibility.

While the mechanism of AI search will continue to evolve, the data suggests that distribution is no longer just a traffic strategy, but a fundamental component of AI visibility.

Photo Illustration by Shutterstock / Canva / Stacker
