How privacy policies enveloped the world

Privacy, or the lack of it, has infiltrated every aspect of modern life. It is an issue not only for what might be deemed the usual suspects (bank accounts, social media profiles, online purchasing behaviors) but also for everyday actions where one might never imagine it mattering. Case in point: Buying a fancy new bathroom scale for the household will prompt a person to read a lengthy and confusing legal agreement about what information this app-connected weight measurement tool will collect about them, what the company will do with it, and what (almost nonexistent) rights they have to protest.

In the last 20 years, privacy policies have gone from an infrequent oddity to a daily reality. A 2008 study estimated that it would take the average person 244 hours per year to read every privacy and usage policy they encountered on the websites they visited, and that was before smartphones were widespread. A more recent study from 2020 made even more concerning discoveries: Reading TikTok's privacy policy alone would take longer than reading "The Communist Manifesto," and Google's privacy policy is longer than the U.S. Constitution.

Given this massive time commitment, it's no wonder almost no one reads privacy policies, let alone understands them, even though the average internet user is asked to agree to a policy in one form or another almost every day. The question of how we reached this point led Stacker to speak to several experts in the field to gauge the true impact of privacy policies on the average person and how the government has addressed the increased need for privacy in the connected world.

Not reading a privacy policy is not indicative of laziness or willful ignorance. Some of the experts we spoke with for this story admitted to only reading privacy policies out of professional curiosity—and even then, rarely. The proliferation of privacy policies is caused by consumer needs and an evolving legislative landscape that has many organizations reaching for compliance. But a law on the horizon may change data collection, privacy policies, and how we interact with our data.

Privacy in America

The U.S. currently has a sectoral approach to privacy. Some areas of life, such as health and credit, are heavily regulated. The Fair Credit Reporting Act largely determines how businesses dealing with credit information can handle that data. The act, which states that consumer information "cannot be provided to anyone who does not have a purpose specified in the Act," is enforced by the Federal Trade Commission, while the Consumer Financial Protection Bureau has rule-making authority. The Health Insurance Portability and Accountability Act limits how health information can be shared. Its Privacy Rule applies to "covered entities," such as health care providers, health insurance companies, and clearinghouses, as well as to their business associates (e.g., companies involved in billing, claims processing, and data analysis).

"I think a lot of people would agree these are pretty sensitive categories of information," Hayley Tsukayama, a senior legislative activist at nonprofit digital rights group Electric Frontier Foundation, told Stacker. "But the laws also have their limits." The limits are that these regulations only affect certain sectors, and it may not always be clear to the average person where that limit lies. "If my doctor and I are talking, that information is protected," Tsukayama said. "[However,] if I've given [similar] information to Google through Fitbit, that information is not protected anymore."

Such conditional protections apply to industries that, by and large, have traditionally handled a significant amount of personal information. What's missing from the U.S. legislative privacy landscape is broad protection. "Privacy is not a right in the U.S.," Tsukayama said. "It's implicit in the Fourth Amendment, and certainly I would argue that it is a right, but it's not enumerated in the U.S. in the same way as, for example, the right to freedom of expression or the right to trial by jury."

Greg Szewczyk, practice co-leader of the Privacy and Data Security Group at the law firm of Ballard Spahr, puts it a little more bluntly: "Until a few years ago, [privacy] was kind of the Wild West outside of specifically regulated industries."

Privacy for the people

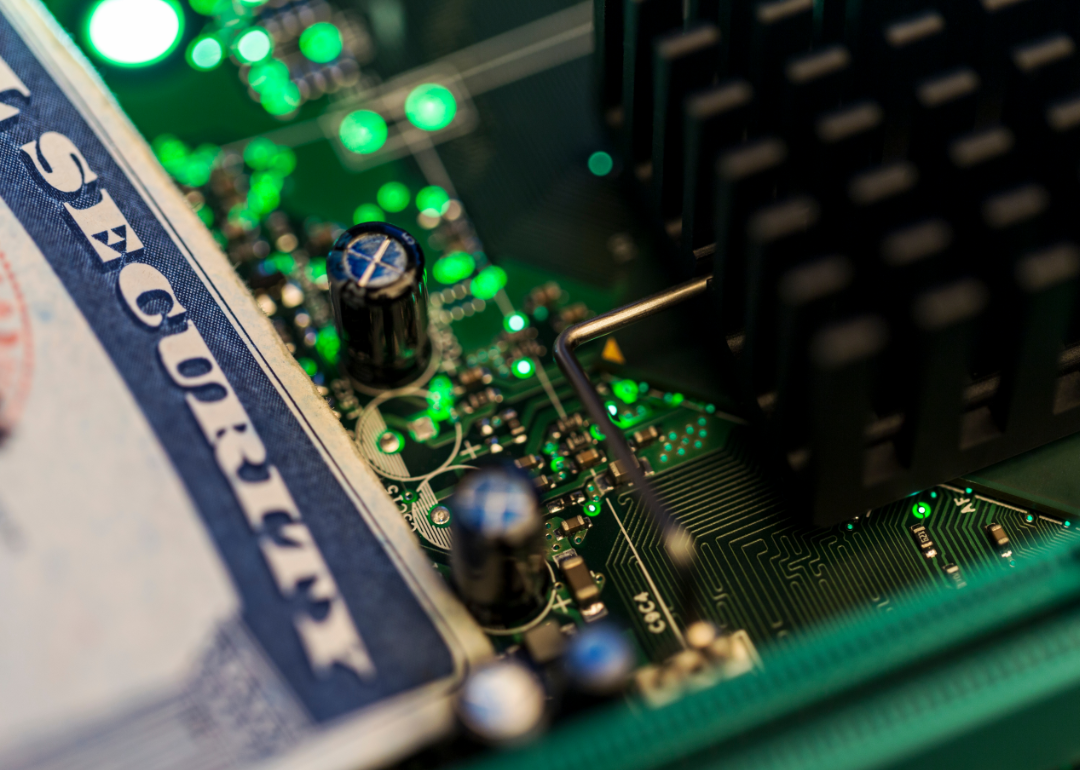

This Wild West mentality has created a frustrating experience for people. After years of companies hoovering up data indiscriminately, people have seen reams of their data, which they believed to be safe and secure, exposed in massive breaches involving major companies in the security, education, banking, and health sectors. Information contained in email accounts and social media profiles is not immune from threats either.

The 2013 breach of Yahoo, which compromised 3 billion accounts, was not only one of the most egregious breaches in history but also demonstrated the degree to which a company might hide evidence of a breach of its network to protect its value and public image. The Yahoo breach was performed by hackers aligned with the Russian government in 2013 but was not publicly disclosed until 2016, when Verizon began acquiring Yahoo.

In June 2021, data associated with some 700 million LinkedIn user accounts, representing approximately 90% of the platform's user base, was posted on the dark web. The perpetrator threatened to sell the data after posting a large portion online. LinkedIn claimed no sensitive or personal data was exposed, but it was later discovered that email addresses, phone numbers, and gender information were included.

While some people view data breaches as an inconvenient cost of doing business, sentiments may be changing as it becomes apparent that the data collected about people could be used for many other purposes. For instance, the 2022 Supreme Court decision that overturned Roe v. Wade has made people keenly aware of what fertility data state governments could access, including in states where abortion is not simply being banned but prosecuted. Either way, people want their data protected.

According to a 2022 McKinsey report on digital trust, 85% of people believe that knowing a company's data privacy policy is important before making a purchase, and more than half said their purchasing is contingent on knowing a company protects its customers' data. (Almost half of the respondents were willing to switch to a competitor service should data disclosure be unclear.) This same report shows the breadth of how frequently breaches occur, with nearly 60% of executives polled reporting at least one data breach in the last three years.

Risk notwithstanding, people's desire for services that can be provided by data collection does not seem to be waning. Another McKinsey study conducted in 2021 showed that more than 70% of people expect personalization from companies, and more than 3 out of 4 are frustrated when they don't receive it. People are willing to allow companies to collect their data to improve their user experience, but they also want that collection to be done responsibly. Since companies so far have done a relatively poor job, state regulations have risen to the occasion.

How privacy policies have spread

In 2004, California passed the first state privacy law, the California Online Privacy Protection Act. This legislation requires companies that collect personally identifiable information to provide a privacy policy that is not only conspicuously accessible but also meets specific requirements, such as listing third parties and providing customers a period of time to review and alter their privacy settings to change what data is allowed to be collected. While privacy policies were around before CalOPPA, the law marked a turning point in making them front and center in online life. After CalOPPA, companies could no longer bury their privacy policies deep in their websites. This law is one of the drivers behind the increased number of privacy policies to which the average person must agree. Another is the rise of the Internet of Things.

As more companies add smart connectivity features to their devices—like that new family scale—they're now required to provide a privacy policy. And since CalOPPA requires that policy to be front and center, people are seeing them more and more often. To put this in perspective, the number of connected smart devices grew by more than 7.5 billion between 2015 and 2020, according to IoT Analytics. And while not every one of them requires agreeing to a privacy policy, many do. As the number of devices collecting data rises along with the number of data breaches targeting the information they collect, states have reevaluated the protections citizens need.

How legislation has changed since CalOPPA

For roughly 15 years, CalOPPA was the only major privacy legislation in the U.S. That changed in 2018 when the California Consumer Privacy Act was signed into law. The law granted citizens of the state the right to know what data is being collected about them, to decline the sale of that data, and to request that their data be deleted, among other things. The CCPA went into effect in early 2020, just in time for nationwide lockdowns that had people spending much more time on their devices. The law is set to expand with the passage of the California Privacy Rights Act, which creates a new regulatory agency and amends the CCPA by providing, among other rights and protections, the right to correct inaccurate information and broader opt-out rights. The CPRA will go into effect in 2023.

The CCPA, which started as a ballot initiative, turned out to be a bellwether. Shortly after its passage, other states began passing similar laws. Colorado, Connecticut, Utah, and Virginia have all passed laws with similar protections. With this new wave of privacy laws, pressure is now on the federal government to act as companies begin to worry about how they'll comply with 50 separate state-level regulations.

"A federal law is preferred over a patchwork of laws," Ray Pathak, vice president of data privacy at Exterro, an information governance company, told Stacker. "From many privacy practitioners' points of view, that is the way to go." The ease of enforcing a single overarching law is likely one reason why, in June 2022, the House of Representatives introduced the American Data Privacy and Protection Act.

The ADPPA would act in many ways like state privacy laws. It would limit the types of data companies can collect and provide the right to access and even delete collected data, among other protections. The bill, introduced by Rep. Frank Pallone Jr. of New Jersey, passed out of committee but has since stalled, likely because the upcoming midterm elections have shifted many elected officials' attention to shoring up partisan support.

Still, with a handful of state laws going into effect in January 2023, there may be enough pressure to pass a federal law sooner rather than later, particularly since, as Constantine Karbaliotis, senior privacy advisor at Exterro, told Stacker, "The U.S. has become the outlier. [The ADPPA] will give individuals more control over their data and make organizations more accountable for that."