Amid growing concerns about AI, people trust these sectors with it the most

This story originally appeared on Verbit and was produced and distributed in partnership with Stacker Studio.

For decades, the prospect of artificial intelligence has captivated audiences. From HAL 9000's chilling betrayal to the Terminator's relentless pursuit, popular culture has long painted AI as a technology that can be both transformative and dangerous. Until recently, however, the idea that artificial intelligence posed any real risk seemed far-fetched, since many people assumed the technology was decades, if not centuries, away.

That changed dramatically in November 2022, when OpenAI's ChatGPT burst onto the scene. After decades of advancing at a glacial pace, powerful artificial intelligence systems suddenly looked like they were just around the corner. Some AI safety thinkers once argued that AI systems should first be developed in "air-gapped" environments, isolated from the internet, where they could more easily cause harm. Such suggestions seem fanciful today. Companies are now racing to incorporate generative AI (artificial intelligence that can create original content) into just about every one of their products and services.

This rapid embrace of AI has left many consumers skeptical or even fearful that the technology could be misused. Verbit examined data from a survey conducted by Morning Consult with the Stevens Institute of Technology to see which sectors Americans trust the most with AI.

The military and health care lead in trust with AI

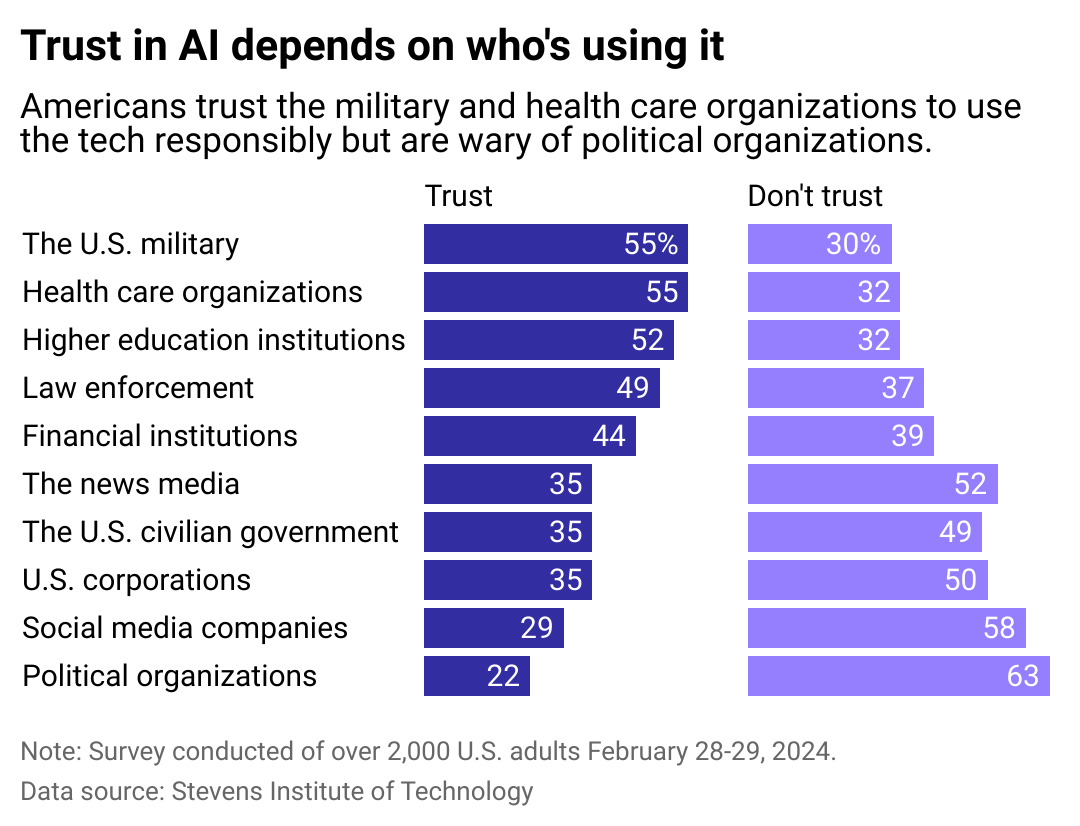

Fielded in February 2024, the Stevens Institute survey asked American adults which sectors they trust with AI. The data shows that people's opinions about AI vary greatly depending on who is using the technology.

The military and health care organizations are considered relatively trustworthy: Around 55% of adults said they trusted each of these sectors to use AI. The military's strong showing reflects broader public sentiment about the armed forces; surveys from Gallup show that the military, after small businesses, is one of America's most trusted institutions. Respondents also expressed fairly high confidence in the police, with 49% saying they trusted law enforcement to use AI.

Social media companies and political organizations sit at the opposite end of the spectrum. Only 29% of Americans trust social media companies to use AI responsibly, and just 22% say the same of political organizations. This also mirrors Gallup's findings, which show that only a minority of Americans have confidence in big tech companies and politicians. In 2018, the political consulting firm Cambridge Analytica was embroiled in a scandal over its unauthorized use of personal Facebook data to target voters in swing states during the 2016 presidential election.

Expanding AI is fine for mundane tasks, less so for sensitive ones

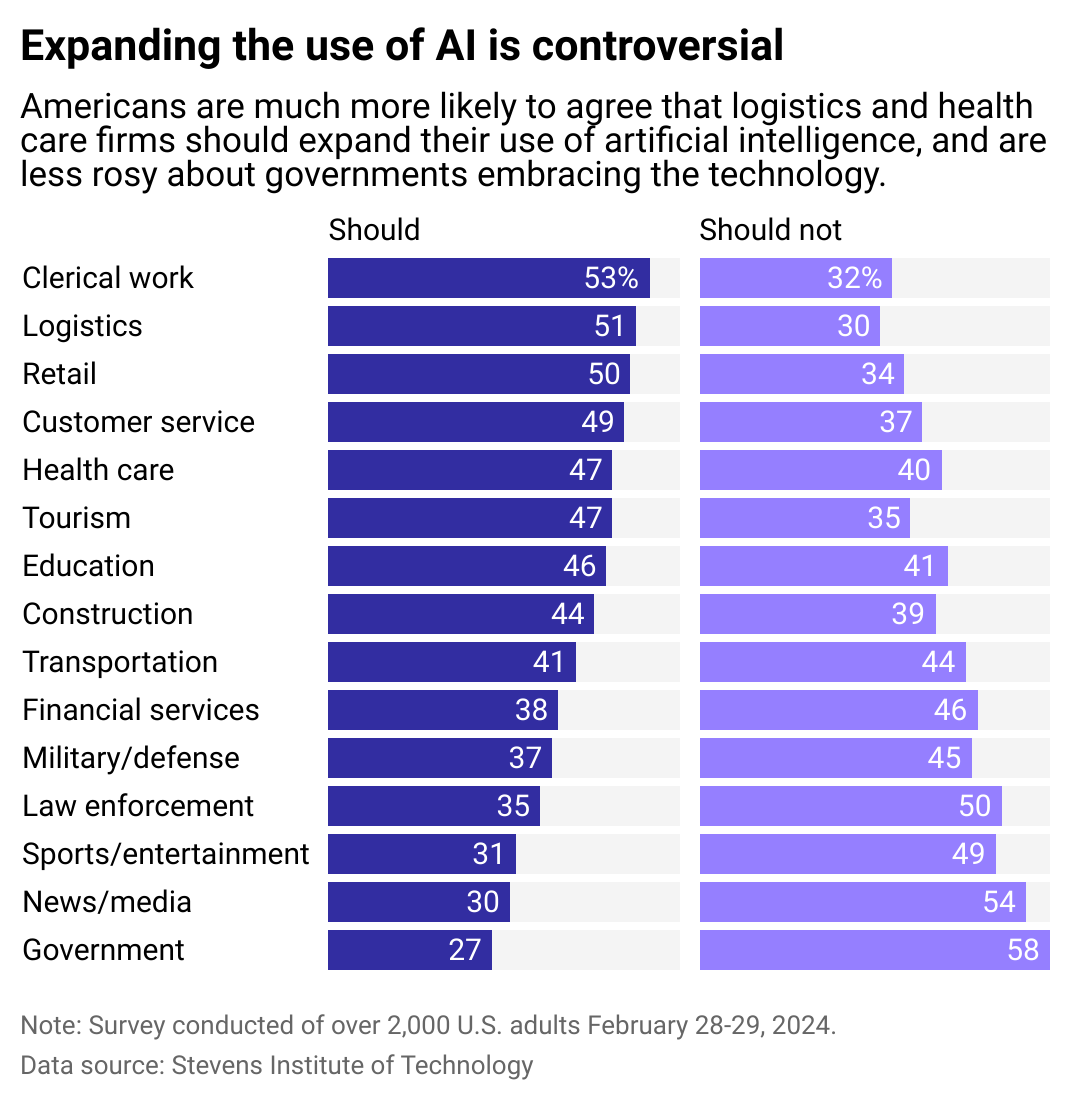

The Stevens Institute survey also asked Americans whether they thought AI use should be expanded in a number of sectors. Respondents were generally comfortable with AI in clerical work, logistics, and retail. Machines that process customer orders or robots that move boxes around a warehouse appear to be less controversial uses of AI.

Only about one-third of respondents thought the military or law enforcement should expand their use of artificial intelligence, making further AI use in those areas a more controversial proposition. It is easy to imagine how such technology, once expanded, could be misused to target innocent people or to enforce a totalitarian state.

The sectors people least trusted to expand their use of AI are the media and the government: Fewer than a third of respondents trust these institutions to deploy more automated systems. Current AI systems have shown a remarkable ability to generate grammatically sound sentences, but they can falter when asked to produce factual work. In January 2023, for example, CNET discovered errors in 41 of the 77 stories it had published using an AI tool. This skepticism could reflect a broader distrust of these institutions, as well as concerns that AI may amplify existing biases or spread misinformation.

Overall, the Stevens Institute data shows that Americans have mixed feelings about the future of AI. While they are generally comfortable with AI handling mundane tasks, they are much warier of it where automated errors could have serious consequences. As the technology continues to improve, more organizations are likely to feel compelled to incorporate AI into their workflows. This data suggests that organizations should take a measured approach to AI adoption, particularly in sensitive sectors.

Story editing by Alizah Salario. Additional editing by Kelly Glass. Copy editing by Kristen Wegrzyn.